Fragile is an audio-visual installation that investigates the relationship between stressful human experiences and the transformations that occur in the brain.

Recent scientific research has shown that stress affects neurons in different areas of the brain. In particular, stress changes neural circuitry and affects its plasticity, that is, its ability to change through growth and reorganization.

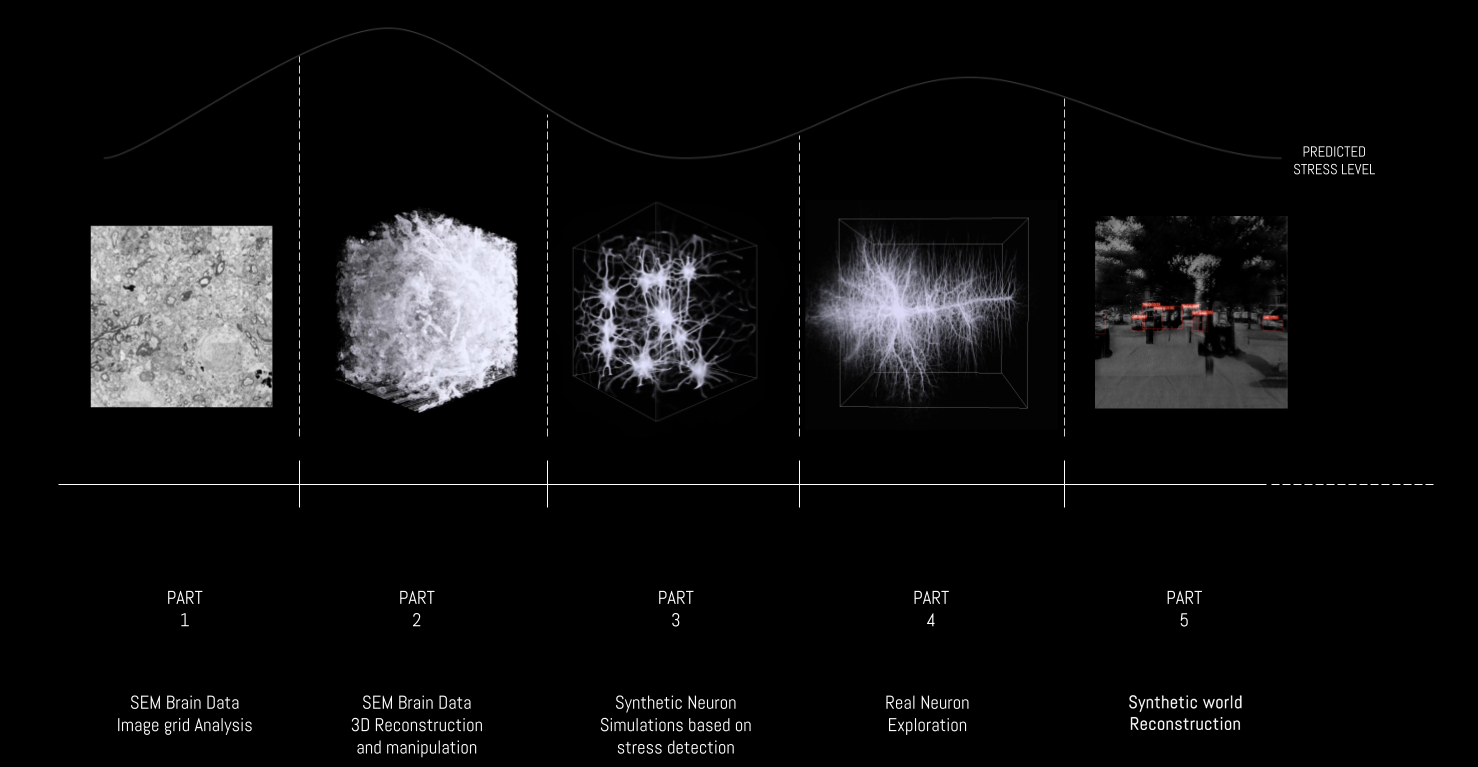

Fragile draws on scientific data from the Society for Neuroscience, reworking this information to reveal how external interactions shape our nervous system and, in turn, influence the way we connect with the world around us. To achieve this, we developed an artwork composed of different digital representations, spread across five screen projections. At the same time, a real-time algorithm continuously collects tweets and predicts the stress associated with each sentence. The resulting values act as a global stress value for the community and are used throughout the installation to drive the audio-visual content.

The work seeks to portray the human brain’s remarkable capacity to adapt and reshape itself through experience, in its ongoing pursuit of balance: a balance to be cherished and protected as something rare, precious, and inherently fragile.

At the core of the process lies a set of algorithms designed to predict stress levels from social media messages. Using a dataset of 20,000 sentences classified as either “stressful” or “neutral,” we trained a convolutional neural network capable of estimating the stress associated with real-time tweets posted by people at any given moment.

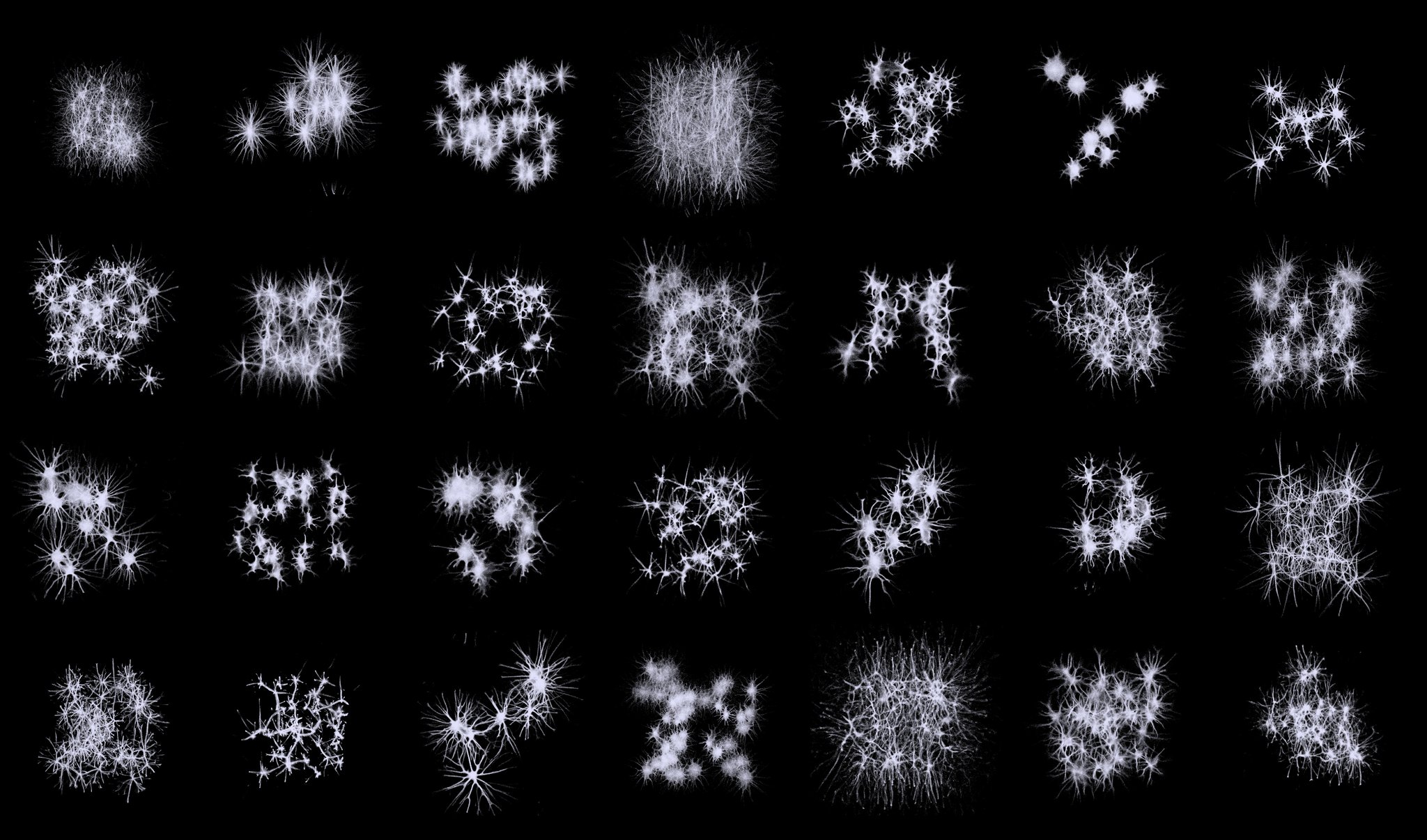

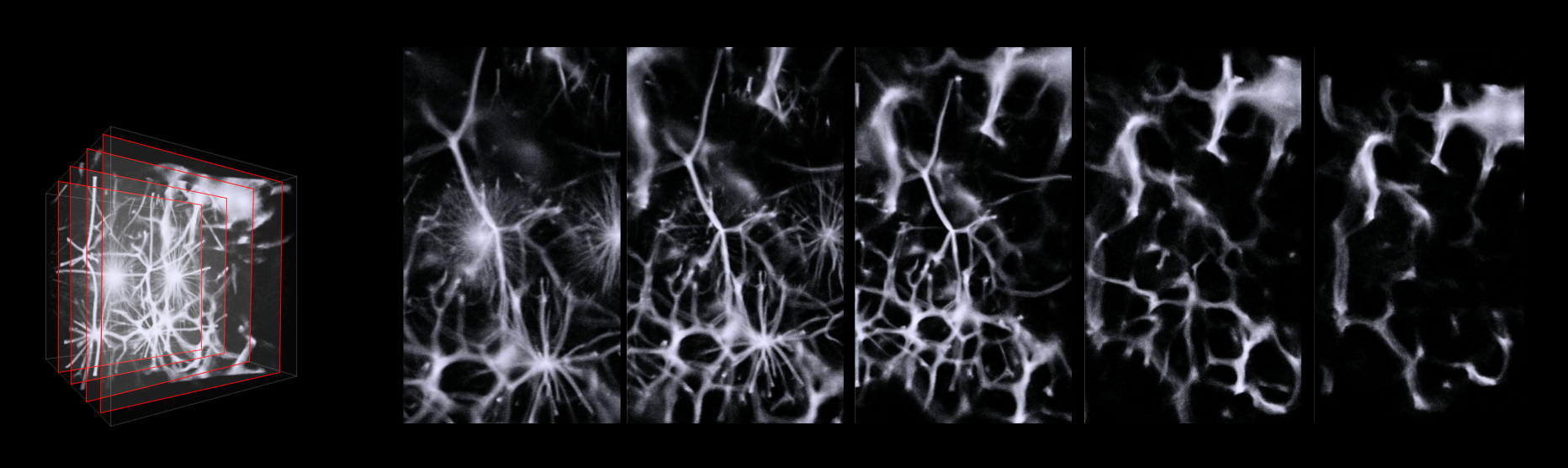

Our aim has been to define a global stress level that can serve as a backbone for the variations occurring at the audio-visual level. We developed a series of 3D representations that explore neuronal behavior, combining the collected data with custom digital simulations. These visualizations are continuously shaped in real time by the social stress values retrieved. As a result, every time the installation restarts, the outcome can be different, offering a new interpretation of this complex phenomenon occurring inside our brain.
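One way to picture how individual predictions could become a single global value is a rolling average over the most recent scores. The sketch below is an illustration only: the class name `GlobalStress`, the window size, the 0 to 1 score range, and the neutral default of 0.5 are all assumptions, not details of the installation.

```python
from collections import deque


class GlobalStress:
    """Rolling mean of per-message stress scores (hypothetical helper).

    Scores are assumed to lie in [0, 1], where 1 means "stressful".
    """

    def __init__(self, window=200):
        # Only the most recent `window` predictions are kept.
        self.scores = deque(maxlen=window)

    @property
    def value(self):
        # Assumed neutral midpoint until the first prediction arrives.
        if not self.scores:
            return 0.5
        return sum(self.scores) / len(self.scores)

    def update(self, score):
        # Clamp so an out-of-range prediction cannot skew the average.
        self.scores.append(min(1.0, max(0.0, score)))
        return self.value
```

A rolling window keeps the value responsive to recent messages while smoothing out single outliers, which suits a signal that has to drive visuals and sound continuously.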

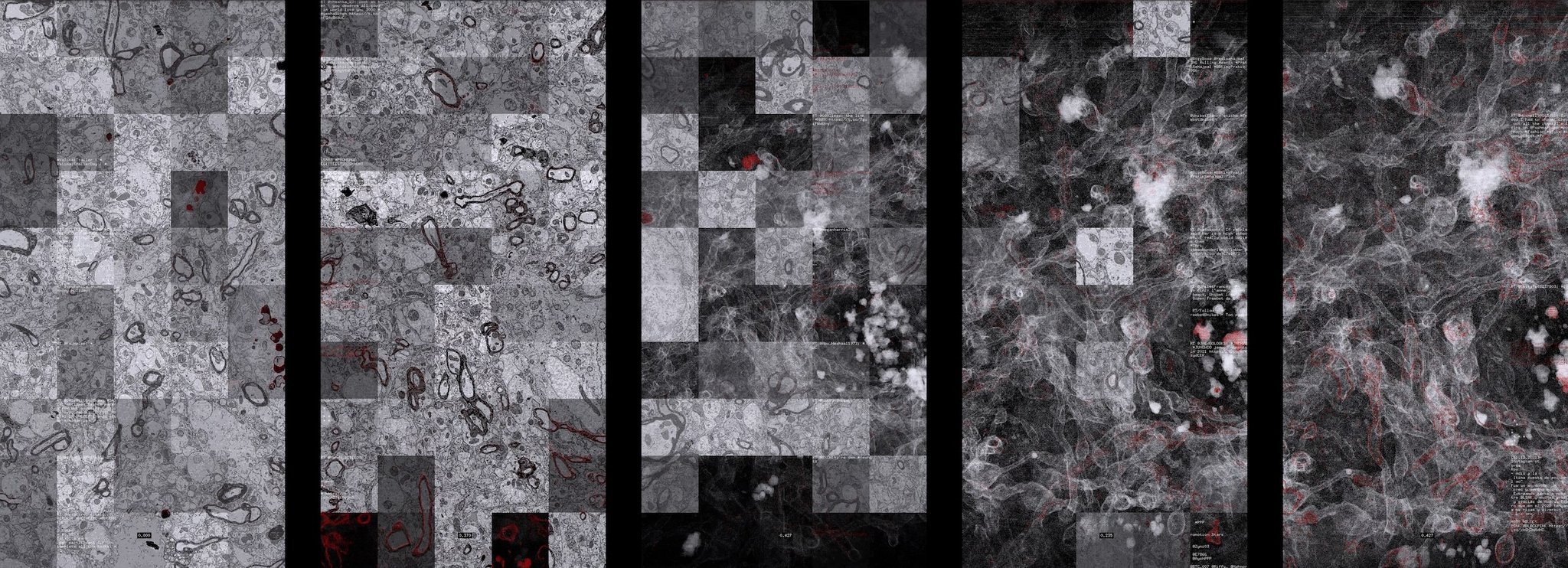

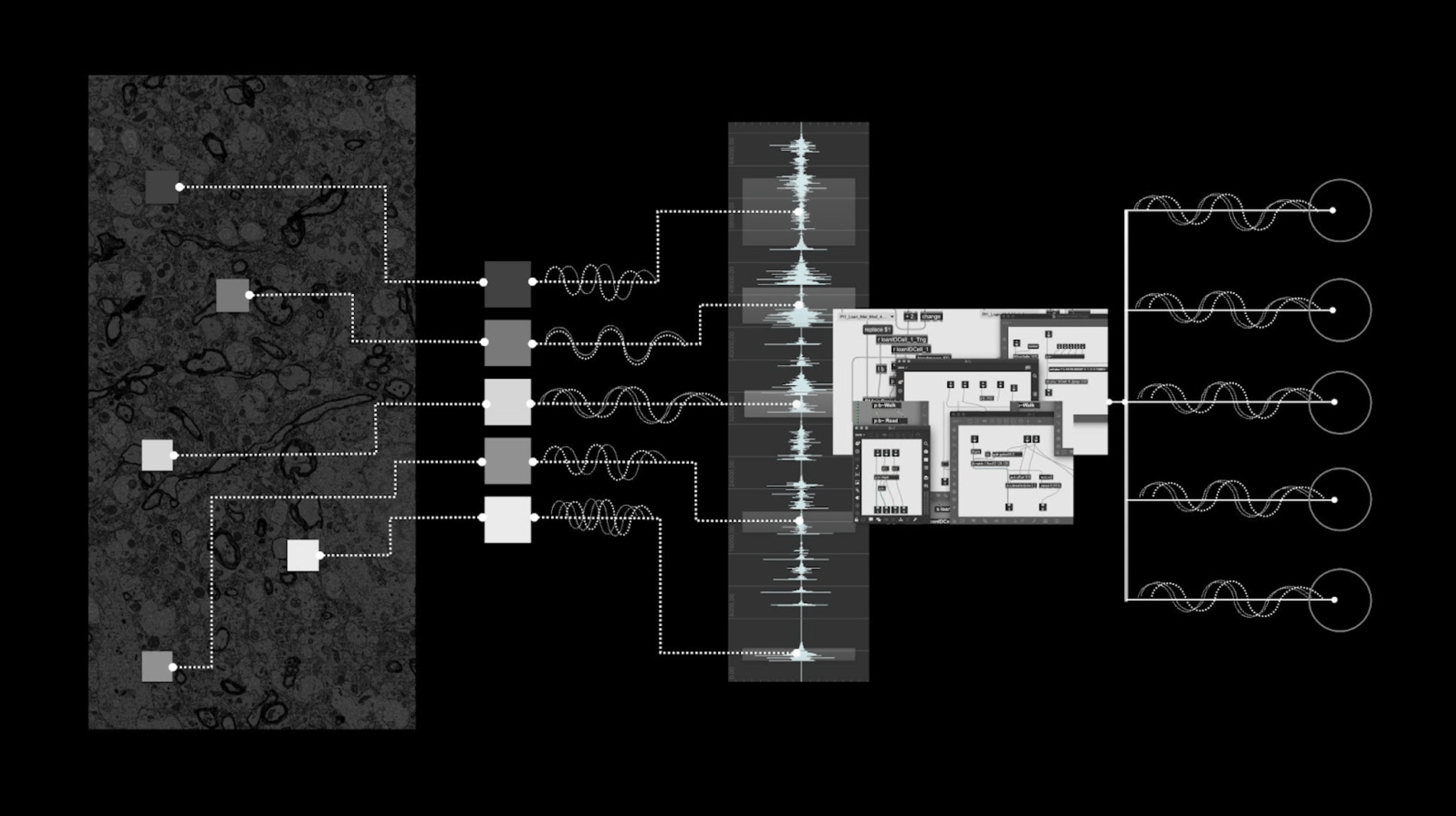

In its opening part, Fragile examines the data provided by the Society for Neuroscience: a series of SEM (scanning electron microscope) images of a human brain. We visualize these section images according to the stress level predicted from the analysis of Twitter messages. When negative stress is depicted, each section of the SEM images appears as a sequence of tiles with unsynchronized frames. Positive stress, instead, is represented through transitions from one section to another, where all tiles belong to the same section. This contrast conveys varying levels of stress among the people experiencing the installation.
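The difference between synchronized and unsynchronized tiles can be sketched as a per-tile offset into the stack of sections. This is a minimal illustration under stated assumptions: the function `tile_sections`, the `desync` parameter in the range 0 to 1, and the random-offset scheme are hypothetical and are not taken from the installation's code.

```python
import random


def tile_sections(current, n_tiles, n_sections, desync, seed=0):
    """Return the section index shown by each tile (hypothetical helper).

    desync = 0.0 keeps every tile on the same section (synchronized);
    desync = 1.0 lets each tile drift anywhere in the stack.
    """
    desync = min(1.0, max(0.0, desync))
    rng = random.Random(seed)  # seeded so a frame can be reproduced
    max_offset = int(round(desync * (n_sections - 1)))
    # Each tile gets its own offset, wrapped around the stack length.
    return [
        (current + rng.randint(0, max_offset)) % n_sections
        for _ in range(n_tiles)
    ]
```

With `desync` at zero every tile shows the current section, so the grid reads as one coherent image; raising it scatters the tiles across different sections.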

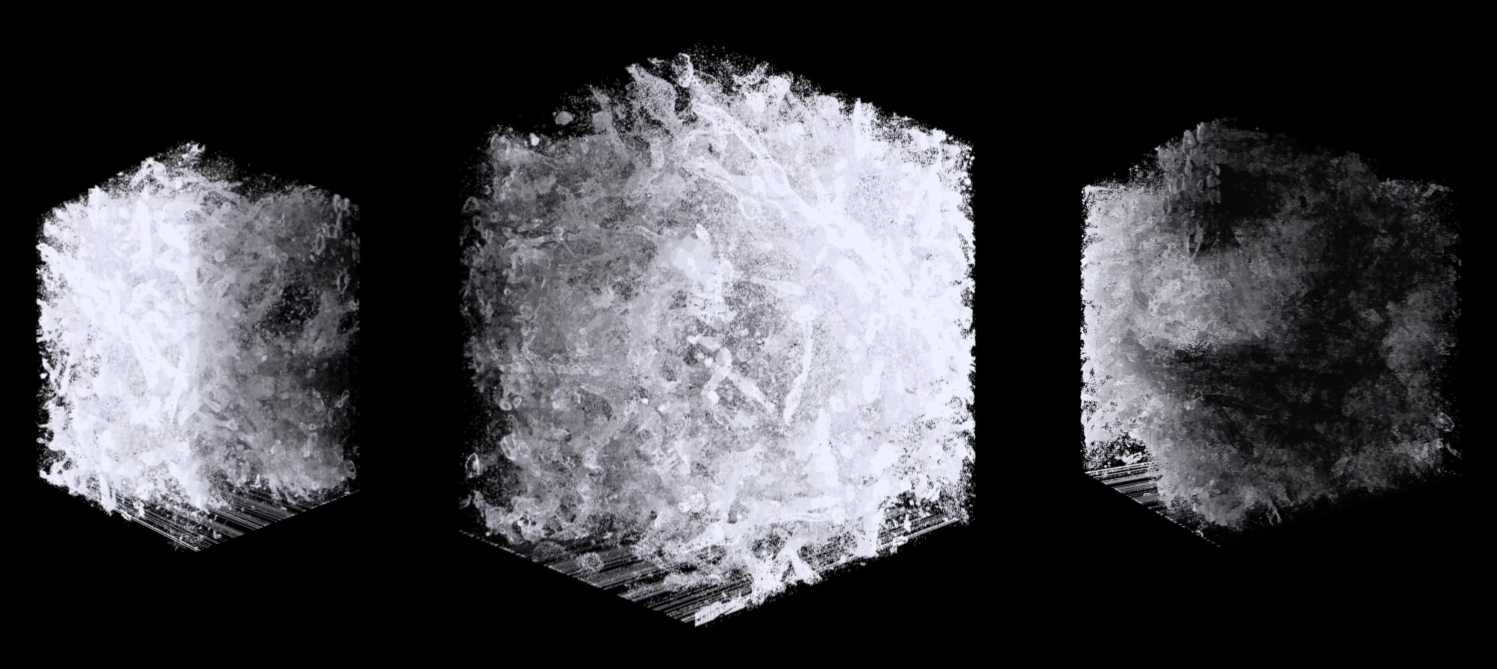

After the initial 2D flat representation, the installation transitions into a 3D visualization that reveals a portion of the human brain and its intricate beauty. A complex particle system reconstructs the brain’s form from 955 SEM sections, layered and rendered in real time with a volumetric shadow effect achieved through GLSL shaders. Once the reconstruction reaches its complete shape, it begins to gradually transform. In this new environment, we explored 3D particle simulations designed to visually echo the patterns that emerge when neurons alter their structure under the influence of a stressor. The pathways and connections between neurons can shift in striking and sometimes permanent ways. To reflect this, we defined a set of behaviors within our system that simulate specific patterns observed in actual neural circuitry.

These simulated neural representations then converge toward the data retrieved from the neuron models extracted from the real human brain.

The final part of the installation shifts focus to another layer of perception: sight. We explored how vision interacts with the environment, reinterpreting it through deep learning techniques. Our goal was to create multiple versions of reality, fictional yet realistic enough to feel plausible, continuously shaped by real-time stress values.

To achieve this, we combined two deep neural networks. The first is a generative model trained on real photographs, which simulates the brain’s ability to reconstruct its surroundings by creating images of urban scenes. The second is an object detection model, designed to identify objects within any given scene. The generation of synthetic images is guided by parameters influenced by the current stress level: higher stress leads to more distorted, harder-to-recognize images, while lower stress produces clearer, more realistic scenes with easily identifiable objects.

The soundscape for Fragile is semi-generative, combining real-time sound synthesis with pre-arranged layers and automations to maintain control over the narrative, structure, and dynamics of the piece.

The generative aspect is inspired by the concept of decomposition: breaking a single element into its constituent parts and then recombining them into a new layered structure with its own unique qualities. Analogue instrument recordings were processed through a custom 5-voice granular synthesis system (matching the number of screens), specifically designed for this installation.

What makes this approach distinctive is that some synthesis parameters, such as grain size, position, and volume, are driven by visual data from brain imagery. Five cursors move within these visualisations, detecting attributes like brightness and pixel location. This data is converted in real time to control and manipulate the sound, creating an intimate link between the audio and the evolving visuals.
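The link between a cursor and a granular voice can be pictured as a simple mapping from image attributes to synthesis parameters. The sketch below is only an illustration: which attribute drives which parameter, the `GrainParams` type, the `cursor_to_grain` function, and the 20 to 200 ms grain range are all assumptions rather than the mapping actually used.

```python
from dataclasses import dataclass


@dataclass
class GrainParams:
    size_ms: float   # grain duration in milliseconds
    position: float  # normalized read position in the source recording
    volume: float    # normalized gain


def cursor_to_grain(brightness, x, y, width, height,
                    min_ms=20.0, max_ms=200.0):
    """Map one cursor reading to grain parameters (hypothetical mapping).

    brightness is assumed to be in [0, 1]; x and y are pixel coordinates
    in an image of size width x height (both larger than 1).
    """
    if width < 2 or height < 2:
        raise ValueError("image must be at least 2x2 pixels")
    nx = min(1.0, max(0.0, x / (width - 1)))
    ny = min(1.0, max(0.0, y / (height - 1)))
    # Assumed mapping: horizontal place picks where in the recording to
    # read, vertical place sets grain length (top of image = long grains),
    # and brightness sets loudness.
    return GrainParams(
        size_ms=min_ms + (1.0 - ny) * (max_ms - min_ms),
        position=nx,
        volume=min(1.0, max(0.0, brightness)),
    )
```

With five cursors, one such mapping per cursor would feed each of the five granular voices independently.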

Fragile is an artwork by fuse*

Artechouse DC / Washington DC, US