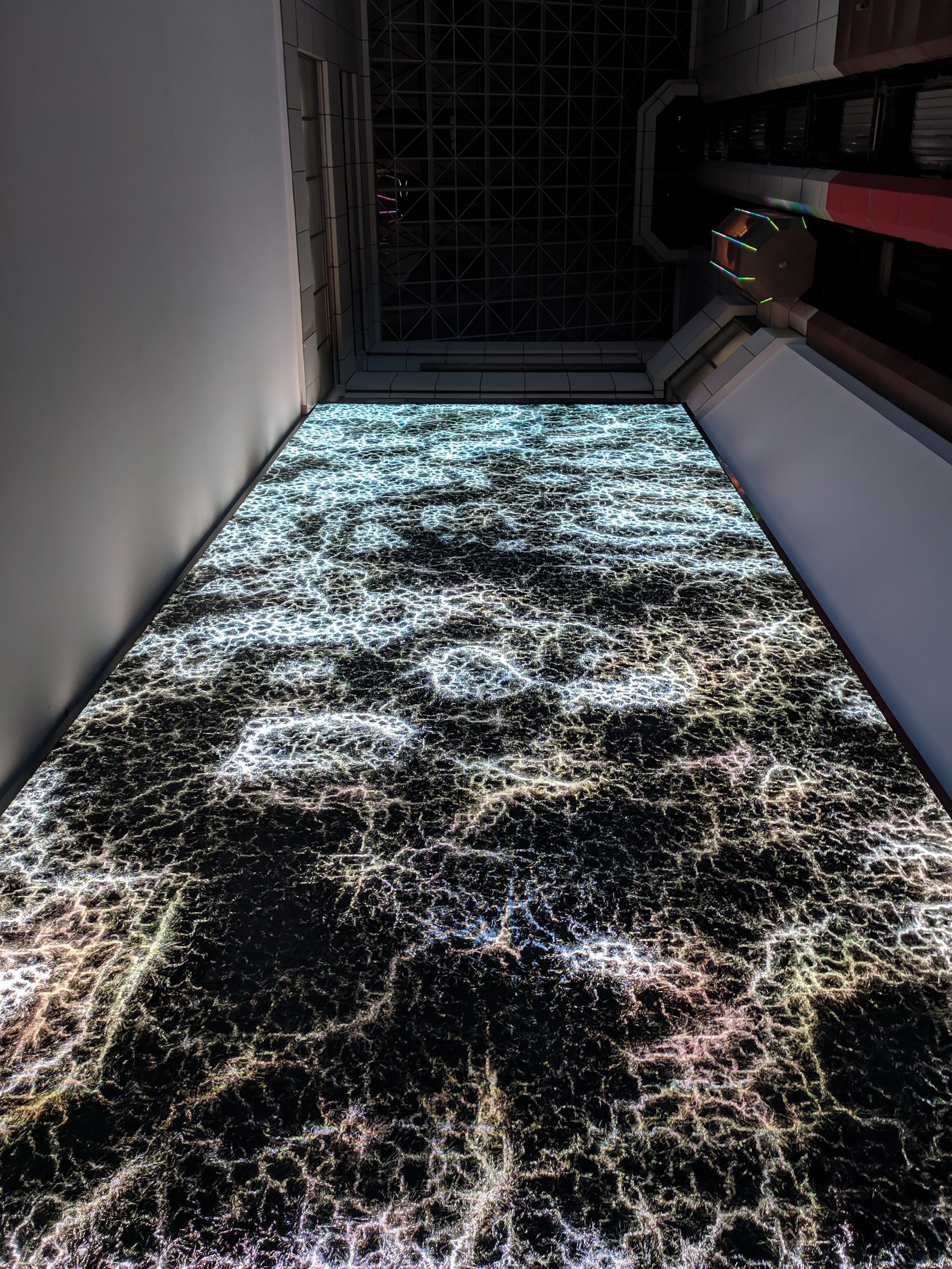

Mimesis is a large-scale installation that takes inspiration from the biological evolution of life on the planet while investigating the influence of human activity on the Earth. It explores a positive vision of what can be preserved, changed or improved in our ecosystem, and of the consequences of our practices on different habitats. Since the beginning of our history, we as humans have tried to imitate and reproduce aspects of reality in order to create a meaningful representation of everything that surrounds us. How can we represent the scenario that lies ahead of us, our future planet? Through this work, we try to reveal how the future will be shaped by the way we interact with the environment. To do this, we created a generative system that collects and processes data from the local environment where the installation is staged. These data constantly affect the visual and sonic outcome, creating an audiovisual representation of the future environment that we will inhabit.

The concept of mimesis is not meant merely as reproduction or as an illusory image of nature, but rather as a manifestation. Through the real-time integration of atmospheric measurement data, nature appears in the digital image intensified in its processuality and unpredictability. Mimesis thus not only offers new insight into aspects of nature that usually escape our sight, but also raises awareness of the effects of human action on the environment.

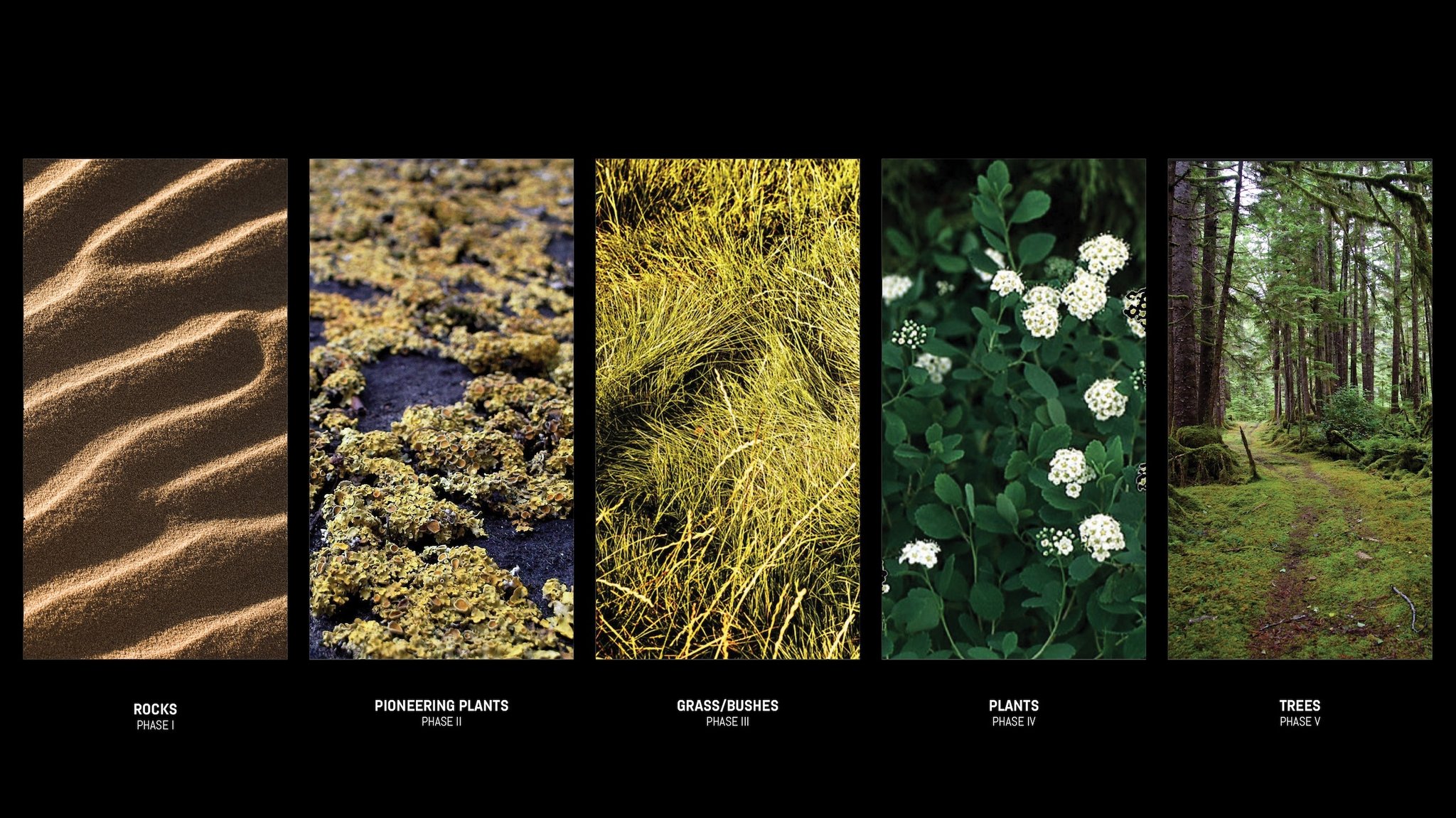

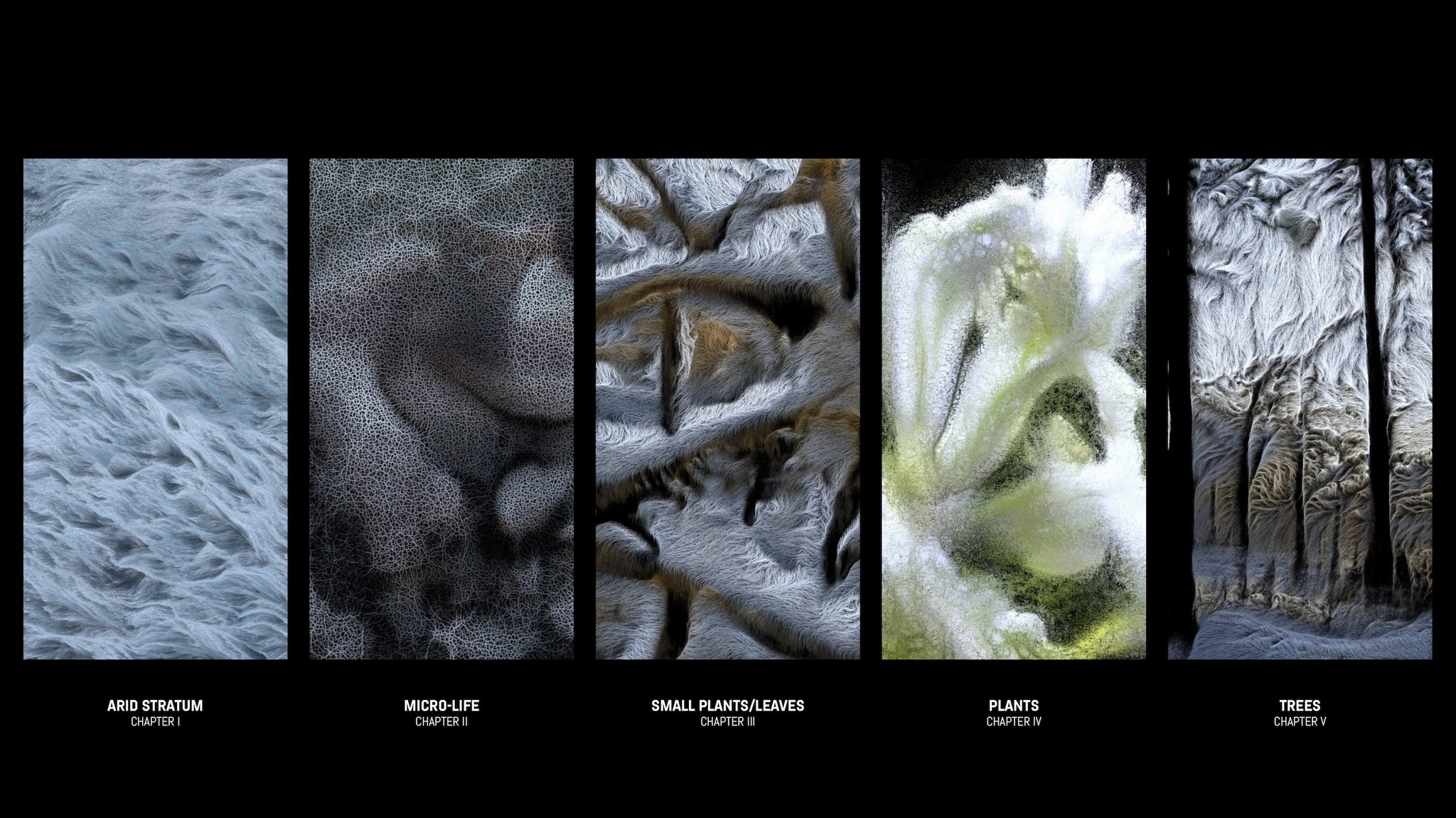

The main theme around which the narrative develops is primary ecological succession: the process that follows the formation of new land and the exposure of a habitat that can be colonised for the first time, as happens after volcanic eruptions or violent floods. The soil changes as organic matter is deposited and the number and diversity of species in the ecosystem gradually increase.

Succession is often divided into stages in which different species establish themselves according to the prevailing conditions. First come pioneer species such as mosses, algae, fungi and lichens, followed by herbaceous plants, then grasses and shrubs, which precede the appearance of more robust plants such as shade-tolerant trees. At each stage, new species move into an area, often because of changes to the environment made by the preceding species, and may replace their predecessors. At some point, the community may reach a relatively stable state and stop changing in composition. The whole process can be seen as the gradual growth of an ecosystem over a long period of time.

In Mimesis, this concept is addressed and investigated through a digital reinterpretation. We outlined five chapters, each corresponding to a phase of the succession, which condense it into a one-hour process that attempts to create a new form of life from the simplest possible conditions.

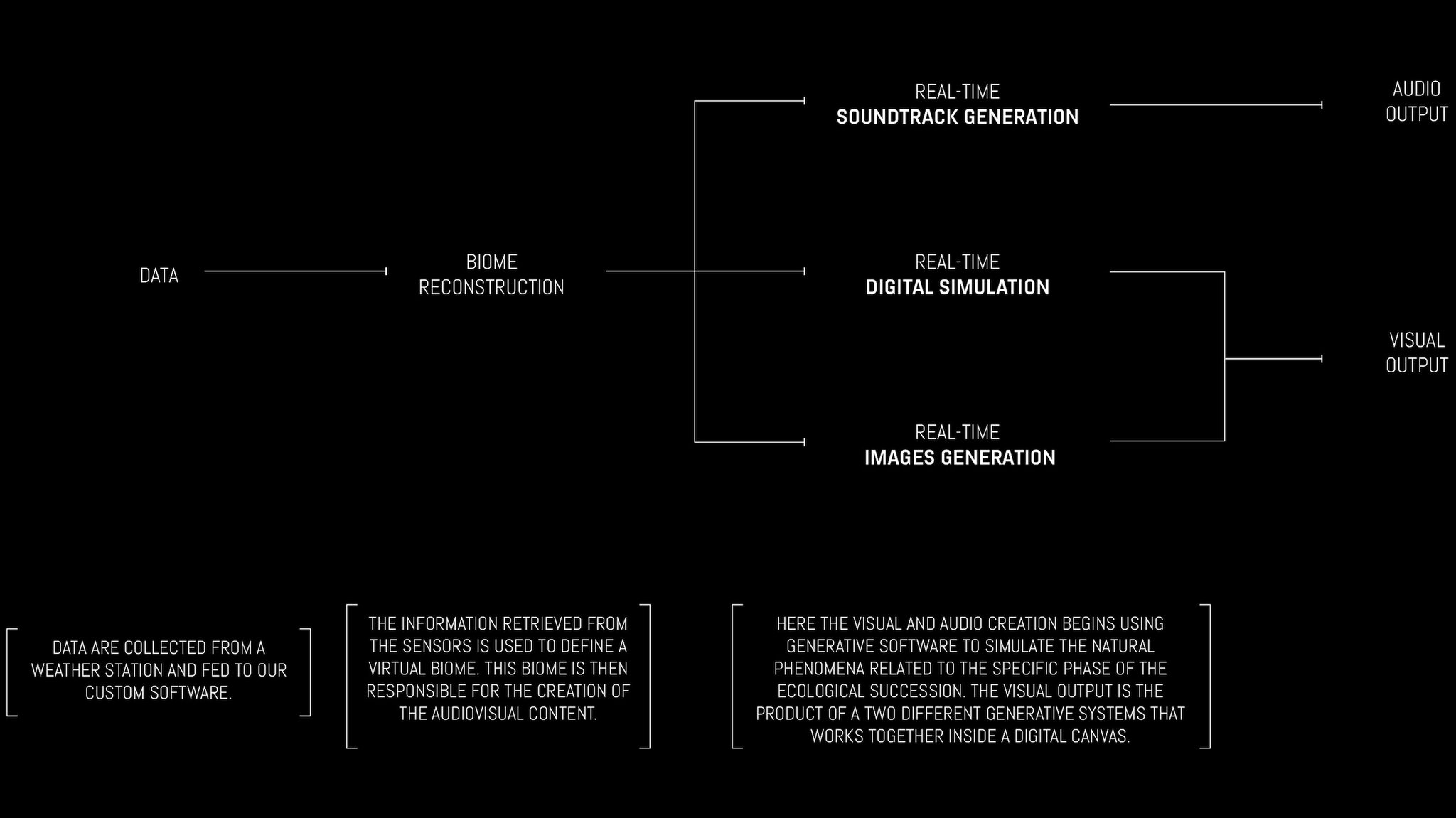

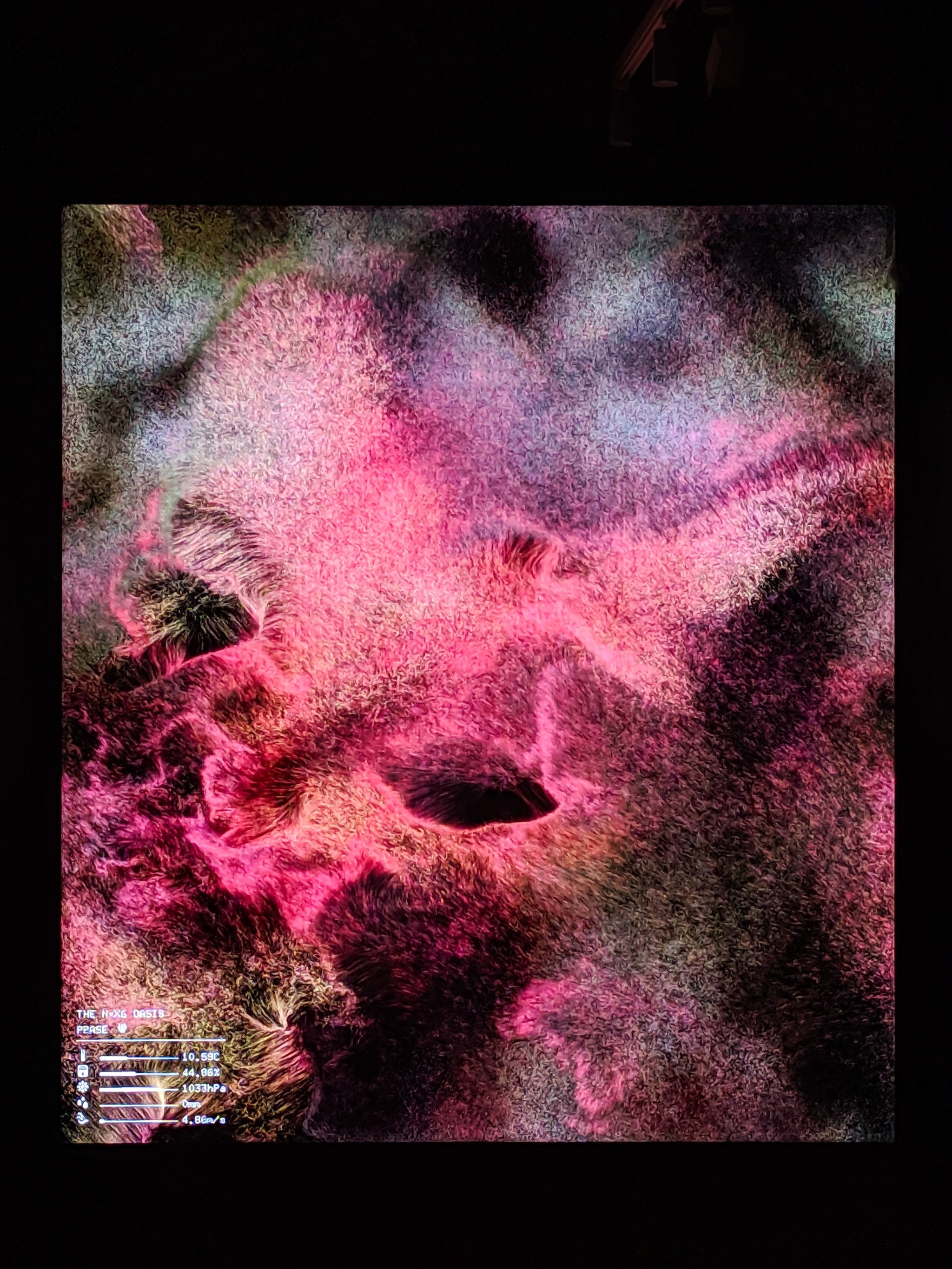

These conditions change continuously throughout the day, since they mirror the climatic conditions of the city where the installation is staged. The information retrieved from the sensors is used to create a biome: a large, naturally occurring community of flora and fauna occupying a major habitat. Each chapter, in both its visual and its audio part, is generated in real time from the live data. Different initial local climate conditions send the ecological process down different paths, while the unpredictability of the weather constantly modifies the audiovisual outcome.

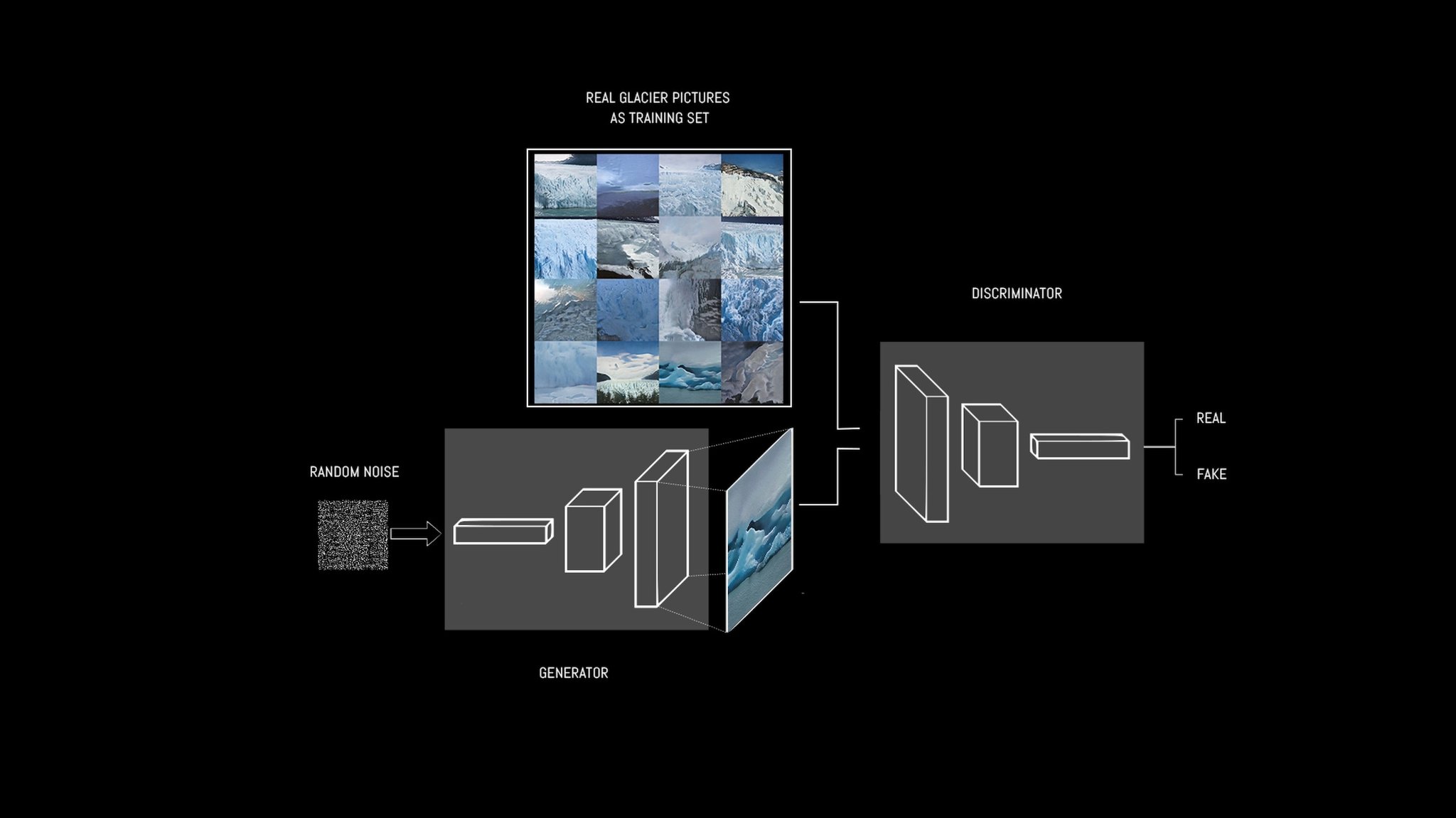

The digital simulations try to emulate various natural phenomena and patterns, using several algorithms applied in combination with one another. The visual outcome is also shaped by software based on Generative Adversarial Networks (GANs), a machine learning architecture particularly suited to generating synthetic images. Specific GANs are used to generate new artificial images for each theme of the ecological succession, and their output is fed into the software, where it continuously affects the final visual result.

Generating new imagery is only one part of the software that produces the visual outcome. The same weather data also influence the audiovisual result by changing the way the digital landscapes evolve throughout the day.

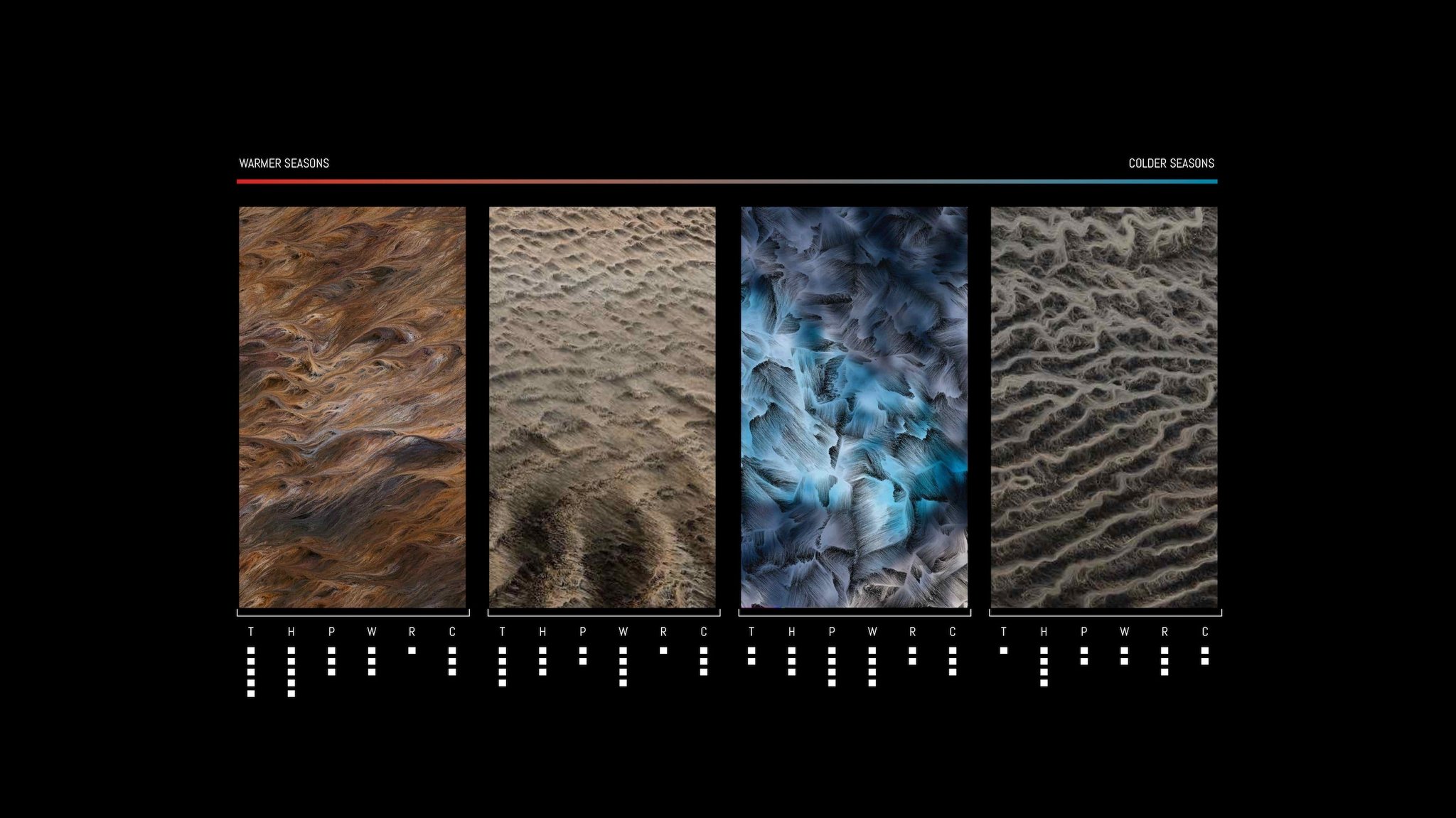

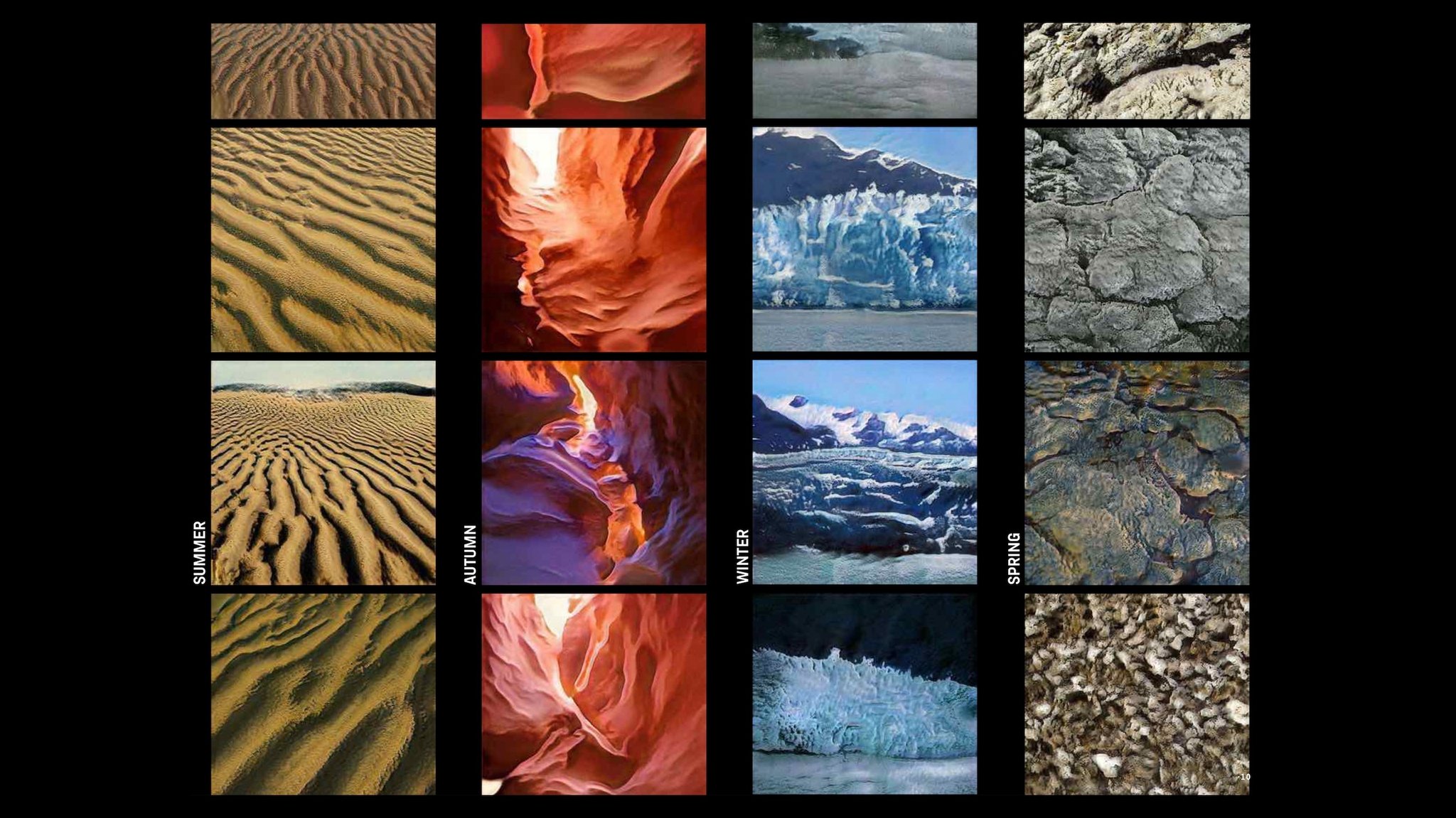

Over the course of the year, the meteorological data influence the content on a longer time scale: each phase of the ecological succession is represented by a different scenario for every season. On the right, fuse* shows an example from the first phase, in which a series of inhospitable terrains is generated by the AI software. During summer, the generation revolves mainly around desert landscapes, which transform into canyons, glaciers and rocks over the other seasons. Smaller changes in the parameters during the day affect the result at a lower level of the hierarchy. The same concept is applied to every other chapter of the succession. As a result, the visual outcome differs greatly from one season to another, a choice that also reflects the fact that life might emerge from a diverse set of conditions.
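The two-level hierarchy described above, where the season picks the scenario and daily readings only fine-tune it, can be illustrated with a small sketch. This is not fuse*'s implementation: the theme table (beyond "summer → desert"), the `SceneParams` fields, the sensor inputs and the linear mappings are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical theme table for the first chapter. The text only states that
# summer revolves around desert landscapes; the other seasons' assignments
# are illustrative guesses.
PHASE1_THEMES = {
    "summer": "desert",
    "autumn": "canyon",
    "winter": "glacier",
    "spring": "rock",
}


@dataclass
class SceneParams:
    theme: str         # top of the hierarchy: fixed by the season
    roughness: float   # lower level: nudged by daily readings, in [0, 1]
    brightness: float  # lower level: nudged by daily readings, in [0, 1]


def season_of(month: int) -> str:
    """Northern-hemisphere meteorological seasons (an assumption)."""
    if month in (12, 1, 2):
        return "winter"
    if month in (3, 4, 5):
        return "spring"
    if month in (6, 7, 8):
        return "summer"
    return "autumn"


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


def scene_for(month: int, temperature_c: float, humidity_pct: float) -> SceneParams:
    # The season selects the theme; daily readings never switch it.
    theme = PHASE1_THEMES[season_of(month)]
    # Daily readings only adjust continuous parameters inside that theme.
    # These linear mappings are hypothetical.
    roughness = clamp01(1.0 - humidity_pct / 100.0)
    brightness = clamp01((temperature_c + 10.0) / 50.0)
    return SceneParams(theme, roughness, brightness)


if __name__ == "__main__":
    print(scene_for(7, 30.0, 20.0))   # a dry summer day
    print(scene_for(1, -5.0, 90.0))   # a humid winter day
```

Keeping the theme choice separate from the continuous parameters means a sudden change in the weather can alter the look of a landscape without abruptly swapping one scenario for another.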

The musical component of the installation is a generative system created with Max/MSP integrated into Ableton Live. For each phase of the digital ecological succession, a soundscape was created by modelling recordings of natural elements in real time. Note generation starts by selecting the scale and key. The scales, or modes, can be ordered by colour or expressivity, from the one with the darkest texture to the most brilliant, since every mode has its own colour. The choice of scale changes with the season, from lighter tones in spring and summer towards darker, colder colours in autumn and winter. Each note in the scale has its own weight, or probability of being played, and this percentage can vary over time or change based on the collected data. The octave in which a note is played follows the same principle.
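The weighted note selection described above can be sketched in a few lines. The actual system runs in Max/MSP and Ableton Live; this Python version only illustrates the idea. The dark-to-bright ordering of the seven diatonic modes is a common convention, while the season-to-mode mapping, the base degree weights and the sensor-to-weight blending in `degree_weights` are hypothetical.

```python
import random

# Modes ordered from darkest to brightest, a common way of ranking them by
# "colour". Each list holds the semitone offsets of the seven scale degrees.
MODES_DARK_TO_BRIGHT = [
    ("locrian",    [0, 1, 3, 5, 6, 8, 10]),
    ("phrygian",   [0, 1, 3, 5, 7, 8, 10]),
    ("aeolian",    [0, 2, 3, 5, 7, 8, 10]),
    ("dorian",     [0, 2, 3, 5, 7, 9, 10]),
    ("mixolydian", [0, 2, 4, 5, 7, 9, 10]),
    ("ionian",     [0, 2, 4, 5, 7, 9, 11]),
    ("lydian",     [0, 2, 4, 6, 7, 9, 11]),
]

# Hypothetical season -> mode index: brighter modes in spring/summer,
# darker ones in autumn/winter, as the text describes.
SEASON_TO_MODE = {"spring": 5, "summer": 6, "autumn": 2, "winter": 1}

# Hypothetical base weights per scale degree (tonic and fifth favoured).
BASE_DEGREE_WEIGHTS = [4, 1, 2, 1, 3, 1, 1]


def degree_weights(sensor_value: float) -> list[float]:
    """Blend the base weights toward uniform as a normalised reading in
    [0, 1] rises (a hypothetical data-to-weight mapping)."""
    v = max(0.0, min(1.0, sensor_value))
    return [w * (1.0 - v) + v for w in BASE_DEGREE_WEIGHTS]


def next_note(season, tonic_midi, weights, octave_weights, rng):
    """Pick one MIDI note: a weighted scale degree plus a weighted octave."""
    _name, steps = MODES_DARK_TO_BRIGHT[SEASON_TO_MODE[season]]
    step = rng.choices(steps, weights=weights)[0]
    octave = rng.choices([-1, 0, 1], weights=octave_weights)[0]
    return tonic_midi + step + 12 * octave


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so runs are reproducible
    w = degree_weights(0.3)
    print([next_note("summer", 60, w, [1, 3, 1], rng) for _ in range(8)])
```

Treating the scale degrees and the octave as two independent weighted draws mirrors the text's statement that the octave "follows the same principle" as the notes.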

The generated notes control the tuning of the filter of a physical-modelling instrument, which in turn shapes the original sound source. Physical modelling synthesis refers to methods of sound synthesis in which the waveform of the generated sound is computed using a mathematical model: a set of equations and algorithms capable of simulating a physical sound source, usually a musical instrument. The parameters of the model can change over time based on the collected data or on values extracted from the visual generative process.
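The text does not say which physical model the instrument uses. Purely as an illustration of the principle, the sketch below uses the Karplus-Strong plucked-string algorithm, a classic and very simple physical model swapped in here as an example: a noise burst circulates through a delay line whose length, set from the note, tunes the feedback loop, while an averaging low-pass filter makes the sound decay like a string. The `damping` parameter stands in, hypothetically, for a value that collected data could drive.

```python
import random


def midi_to_hz(note: float) -> float:
    """Equal-tempered pitch, with A4 (MIDI 69) = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)


def karplus_strong(note, duration_s, sample_rate=44100, damping=0.996, seed=0):
    """Plucked-string model: the delay-line length sets the pitch (roughly
    sample_rate / (period + 0.5) with this averaging filter), and the
    damping factor controls how quickly the tone dies away."""
    rng = random.Random(seed)
    period = max(2, round(sample_rate / midi_to_hz(note)))    # delay length in samples
    buffer = [rng.uniform(-1.0, 1.0) for _ in range(period)]  # initial noise excitation
    out = []
    for i in range(int(duration_s * sample_rate)):
        idx = i % period
        nxt = (i + 1) % period
        out.append(buffer[idx])
        # Two-point average = simple low-pass loop filter, scaled by damping.
        buffer[idx] = damping * 0.5 * (buffer[idx] + buffer[nxt])
    return out


if __name__ == "__main__":
    # A hypothetical data-driven damping: e.g. higher humidity -> shorter decay.
    samples = karplus_strong(note=60, duration_s=0.5, damping=0.99)
    print(len(samples), max(abs(s) for s in samples))
```

Because the pitch comes from the loop length and the timbre from the loop filter, a note sequence and a slowly varying data stream can drive the same instrument independently, which is the kind of split the text describes.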

Mimesis is an artwork by fuse*